In the previous article, we used Terraform output variables in an Azure DevOps YAML Pipeline. In this article we will go further by displaying the summary of changes as a comment in the PR.

Using Terraform output values in Azure DevOps pipeline

When working with Terraform to deploy an infrastructure in an Azure DevOps pipeline, it is useful to use the output values in the following steps of your pipeline. Let’s see how we can achieve this easily.

Prevent breaking changes to your APIs with Roslyn

A while ago, I wrote about ensuring the correctness of your API and introduced tests to check that you don’t break the contracts. Fortunately, Microsoft has introduced a Roslyn analyzer that does this job better and helps you prevent breaking changes to your APIs. Here’s how!

Set up the analyzer

The analyzer is contained in a NuGet package that you can install on your .Net Framework and .Net Core projects. Its name is Microsoft.CodeAnalysis.PublicApiAnalyzers (version 2.9.4 at the time of writing).

Installation works like any other NuGet package. Once the analyzer is installed, you can start configuring it.

For the analyzer to work, you need to add two files to each project you want to analyze: PublicAPI.Shipped.txt and PublicAPI.Unshipped.txt.

For the analyzer to take them into account, you have to declare those two files as “Additional Files” in your csproj (having them as Content doesn’t work):

<AdditionalFiles Include="PublicAPI.Shipped.txt" />

<AdditionalFiles Include="PublicAPI.Unshipped.txt" />

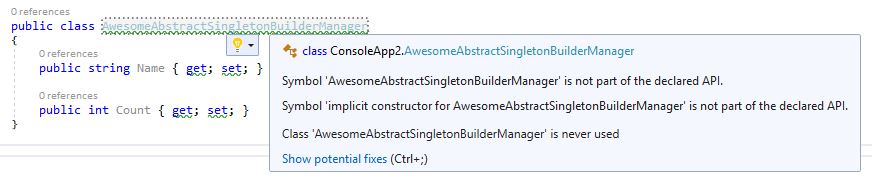
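For reference, here is a minimal sketch of how the relevant parts of the csproj might look once the package and the two files are in place. The version is the one mentioned above. The `PrivateAssets="all"` attribute is my own assumption, not something the analyzer requires: it keeps the analyzer as a build-time-only dependency, so it doesn't flow to consumers of your package.

```xml
<ItemGroup>
  <!-- The analyzer package. PrivateAssets="all" is an assumption: it keeps the analyzer out of your package's dependencies. -->
  <PackageReference Include="Microsoft.CodeAnalysis.PublicApiAnalyzers" Version="2.9.4" PrivateAssets="all" />
</ItemGroup>

<ItemGroup>
  <!-- Both files must be declared as AdditionalFiles, not Content, or the analyzer ignores them. -->
  <AdditionalFiles Include="PublicAPI.Shipped.txt" />
  <AdditionalFiles Include="PublicAPI.Unshipped.txt" />
</ItemGroup>
```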

With those two files in place, you will see squiggles under all your public classes and members, telling you that they’re not part of the declared API and that you should add them.

Fortunately, the light bulb is here to help. Click on it to apply the fix and add the public members to the declared API.

Shipped, unshipped

By default, all the members added by the Roslyn fix are written to the PublicAPI.Unshipped.txt file.
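To give an idea of the format, here is a sketch of what the unshipped file might contain after applying the fix. The `MyLibrary.Calculator` class, its public parameterless constructor and its `Add` method are hypothetical names used only for illustration. Each public type and member gets its own line, and members are written as a signature followed by the return type.

```text
MyLibrary.Calculator
MyLibrary.Calculator.Add(int a, int b) -> int
MyLibrary.Calculator.Calculator() -> void
```

Because each API member is a single line, a breaking change such as a removed method or a changed parameter type shows up as a deleted or modified line in the diff.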

How you manage the content of those two files is up to you and depends on your release/branching strategy.

Here are a few tips, though.

If you’re working with a Git flow branching strategy, all the changes should go into the unshipped file. When reviewing the PR, reviewers have to check two things: the shipped file didn’t change, and the changes to the unshipped file don’t break compatibility (unless that is intended).

Once merged into the develop branch, the changes accumulate in the unshipped file.

When preparing a release (and therefore creating the release branch), you can move all the lines to the shipped file, as you’re shipping those members in the API.

If you’re working with GitHub flow, the same should be done before merging the PR into master.

Break all the things!

Ok, now you’re able to track all the changes to your API. However, when making a change to it, you’ll see only warnings in Visual Studio (or your build pipeline).

If you want to go further (and I suggest you do), you can treat several warnings as errors in your csproj:

<WarningsAsErrors>$(WarningsAsErrors);RS0016;RS0017;RS0022;RS0024;RS0025;RS0026;RS0027</WarningsAsErrors>
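In context, the element goes inside a `PropertyGroup`. The sketch below adds a short description of each rule as a comment. These descriptions are my own paraphrases of the rule titles as I understand them, so check them against the analyzer's rule list before relying on them.

```xml
<PropertyGroup>
  <!--
    RS0016: a public symbol is missing from the declared API files
    RS0017: a symbol listed in the API files no longer exists in the code
    RS0022: a constructor makes a non-inheritable base class inheritable
    RS0024: the contents of the API files are invalid
    RS0025: a symbol is duplicated in the API files
    RS0026: several public overloads have optional parameters
    RS0027: a public API with optional parameters should have the most parameters among its overloads
  -->
  <WarningsAsErrors>$(WarningsAsErrors);RS0016;RS0017;RS0022;RS0024;RS0025;RS0026;RS0027</WarningsAsErrors>
</PropertyGroup>
```

Prepending `$(WarningsAsErrors)` preserves any value already set elsewhere, for example in a shared props file.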

You can look for more information about the rules on GitHub.

Now you’re ready to ship your APIs with confidence!

Take advantage of secrets to store ASP.Net Core Kestrel certificates in Docker Swarm

When working with ASP.Net Core in Docker containers, it can be cumbersome to deal with certificates. While there is documentation about setting up a certificate for a dev environment, there’s no real guidance on how to make it work when deploying containers in a Swarm, for example.

In this article we are going to see how to take advantage of Docker secrets to store ASP.Net Core Kestrel certificates in the context of Docker Swarm.

Using ASP.Net Webform Dependency Injection with .NET 4.7.2

Starting with .NET 4.7.2 (released April 30th), Microsoft offers an extension point to plug in our favorite dependency injection container when developing ASP.Net Webforms applications, making it possible to inject dependencies into UserControls, Pages and MasterPages.

In this article we are going to see how to build an adaptation layer to plug in Autofac or the container used in ASP.Net Core.

Ensuring the correctness of your API

Designing and maintaining an API is hard. Whether you’re distributing assemblies, creating NuGet packages or exposing services (such as WCF, SOAP or REST), you must pay attention to the contracts you provide. The contract must be well designed for the consumer and must be stable over time. In this article, we’re going to see some rules to follow, tips to help and tools to use to ensure that your APIs are great to use.

Although this post focuses on the .Net environment, most of it applies to other environments (Java, Node, Python, etc.).

Securing the connection between Consul and ASP.Net Core

The previous article introduced how to use Consul to store the configuration of ASP.Net Core (or plain .Net) applications. However, it was missing an important piece: security! In this article, we will see how to address this by using the ACL mechanism built into Consul with the previously developed code, in order to secure the connection between Consul and ASP.Net Core.

Using Consul for storing the configuration in ASP.Net Core

Consul from HashiCorp is a tool used in distributed architectures for service discovery, health checking and key-value storage for configuration. This article details how to use Consul for storing the configuration in ASP.Net Core by implementing a ConfigurationProvider.

Deploying Sonarqube on Azure WebApp for Containers

Sonarqube is a tool for developers to track the quality of a project. It provides a dashboard to view issues in a code base and integrates nicely with VSTS for analyzing pull requests, a good way to keep improving the quality of our apps.

Deploying, running and maintaining Sonarqube can however be a little troublesome. Usually, it’s done inside a VM that needs to be maintained, secured, etc. Even in Azure, a VM needs maintenance.

What if we could use the power of other cloud services to host our Sonarqube? The database could easily go inside a SQL Azure. But what about hosting the app? Hosting in a container offering (ACS/AKS) can be a little complicated to handle (and deploying a full Kubernetes cluster just for Sonarqube is a bit extreme). Azure Container Instances (ACI) is quite expensive for running a container permanently.

That leaves us with WebApp for Containers, which allows us to run a container inside the context of App Service for Linux. The main benefit is that everything is managed: from running and updating the host to certificate management and custom domains.

Mobile DevOps with VSTS and HockeyApp, the video

On Wednesday, June 15, I had the opportunity to co-host a session with Mathilde Roussel for the Cross-Platform Paris meetup.

The topic was DevOps for mobile applications with VSTS (Visual Studio Team Services) and HockeyApp, two Microsoft products.

Using an Android application, we demonstrated the concept of a build pipeline, unit and UI tests, as well as beta deployment and the management of feedback and crashes.

You can find the video below.